MicroShift enables dynamic storage provisioning with the Logical Volume Manager Storage (LVMS) provider. The LVMS plugin is a Container Storage Interface (CSI) plugin that manages LVM volumes for Kubernetes. LVMS provisions new LVM logical volumes (LVs) for container workloads with persistent volume claims (PVCs). Each PVC references a storage class that represents an LVM volume group (VG) on the host node.

Prerequisites

- MicroShift 4.13 installed on RHEL 9.2. Refer to "Microshift 4.13 installation on RedHat Enterprise Linux 9.2" for further details.

Configuration

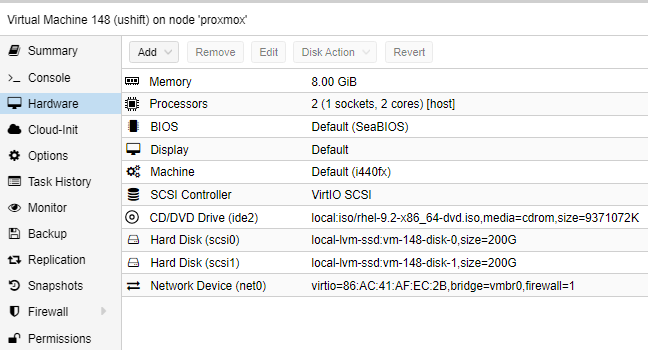

Add an extra hard disk for dynamic storage provisioning with LVMS. The screenshot shows my machine configuration. MicroShift uses the second hard disk (scsi1) for the LVMS plugin.
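Before creating any LVM objects, it can help to confirm that the new disk is visible to the operating system. The following is a minimal sketch, assuming the standard `lsblk` utility is installed; the function name `list_whole_disks` is made up for illustration.

```shell
# List whole disks (not partitions) as "/dev/NAME SIZE", one per line.
# lsblk flags: -d skips partitions, -n drops the header, -o selects columns.
# list_whole_disks is a hypothetical helper name, not part of any tool.
list_whole_disks() {
    lsblk -d -n -o NAME,SIZE,TYPE | awk '$3 == "disk" { print "/dev/" $1, $2 }'
}

# Usage (run on the MicroShift host):
#   list_whole_disks
```

A disk that appears here but not in the `pvs` output is a candidate for the new physical volume. Double-check that it holds no data before using it.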

Create a volume group

Check the physical volumes on the machine by running the following command.

pvs

Terminal output for reference

[root@localhost hyrule]# pvs
PV         VG   Fmt  Attr PSize    PFree
/dev/sda2  rhel lvm2 a--  <199.00g    0

Create a PV. Change the physical volume name to match your system by inspecting the /dev directory. In my case it is /dev/sdb.

pvcreate /dev/sdb

Terminal output for reference

[root@localhost hyrule]# pvcreate /dev/sdb
Physical volume "/dev/sdb" successfully created.
[root@localhost hyrule]# pvs
PV         VG   Fmt  Attr PSize    PFree
/dev/sda2  rhel lvm2 a--  <199.00g       0
/dev/sdb        lvm2 ---   200.00g 200.00g

Create a new volume group

vgcreate hyrule /dev/sdb

Terminal output for reference

[root@localhost hyrule]# vgcreate hyrule /dev/sdb
Volume group "hyrule" successfully created

Check the PVs on the machine.

[root@localhost hyrule]# pvs
PV         VG     Fmt  Attr PSize    PFree
/dev/sda2  rhel   lvm2 a--  <199.00g        0
/dev/sdb   hyrule lvm2 a--  <200.00g <200.00g

LVMS Configuration

Create an LVMS configuration file. When MicroShift runs, it reads the LVMS configuration from /etc/microshift/lvmd.yaml. Make sure that the volume group ‘hyrule’ has been created.
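One way to confirm that the volume group exists is sketched below. It assumes the LVM2 `vgs` command is available and is run with root privileges; `vg_exists` is a hypothetical helper name.

```shell
# Return success (exit status 0) if the named LVM volume group exists.
# vgs prints names with leading spaces, so awk strips them before the
# exact, whole-line match done by grep -Fqx.
vg_exists() {
    vgs --noheadings -o vg_name 2>/dev/null | awk '{ print $1 }' | grep -Fqx -- "$1"
}

# Usage:
#   vg_exists hyrule && echo "volume group found" || echo "volume group missing"
```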

sudo cp /etc/microshift/lvmd.yaml.default /etc/microshift/lvmd.yaml

Edit /etc/microshift/lvmd.yaml.

# Unix domain socket endpoint of gRPC
socket-name: /run/lvmd/lvmd.socket
device-classes:
  # The name of a device-class
  - name: default
    # The group where this device-class creates the logical volumes
    volume-group: hyrule
    # Storage capacity in GiB to be spared
    spare-gb: 0
    # A flag to indicate that this device-class is used by default
    default: true
    # The number of stripes in the logical volume
    #stripe: ""
    # The amount of data that is written to one device before moving to the next device
    #stripe-size: ""
    # Extra arguments to pass to lvcreate, e.g. ["--type=raid1"]
    #lvcreate-options:
    #- ""

Restart MicroShift

sudo systemctl restart microshift

Testing
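MicroShift can take a little while to come up, so it may help to wait for the LVMS storage class before creating workloads. The sketch below polls with `oc get storageclass`. The class name `topolvm-provisioner` is taken from the `oc get pvc` output later in this post; the helper name, attempt count, and delay are illustrative assumptions.

```shell
# Poll until a storage class with the given name exists.
# Arguments: NAME [ATTEMPTS] [DELAY_SECONDS]; the defaults are arbitrary.
# wait_for_storage_class is a hypothetical helper name.
wait_for_storage_class() {
    name=$1
    attempts=${2:-30}
    delay=${3:-2}
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # oc exits non-zero while the storage class is missing
        # or the API server is not reachable yet.
        if oc get storageclass "$name" -o name >/dev/null 2>&1; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Usage:
#   wait_for_storage_class topolvm-provisioner && echo "storage class ready"
```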

Create a namespace

oc create ns test

Create test.yaml and copy the contents below into it.

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: my-lv-pvc
  namespace: test
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1G
---
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
  namespace: test
spec:
  containers:
  - name: nginx
    image: quay.io/jitesoft/nginx
    command: ["/bin/sleep", "1d"]
    volumeMounts:
    - mountPath: /mnt
      name: my-volume
    securityContext:
      allowPrivilegeEscalation: false
      capabilities:
        drop:
        - ALL
      runAsNonRoot: true
      seccompProfile:
        type: RuntimeDefault
  volumes:
  - name: my-volume
    persistentVolumeClaim:
      claimName: my-lv-pvc

Create the PVC and pod

oc create -f test.yaml

Check the status of the pod, PVC, and PV.

[hyrule@localhost test]$ oc get po -n test
NAME     READY   STATUS    RESTARTS   AGE
my-pod   1/1     Running   0          2m7s
[hyrule@localhost test]$ oc get pvc -n test
NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
my-lv-pvc   Bound    pvc-1d6e6430-b052-4c4b-8ff2-df3b23dd9160   1Gi        RWO            topolvm-provisioner   2m13s
[hyrule@localhost test]$ oc get pv -n test
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS          REASON   AGE
pvc-1d6e6430-b052-4c4b-8ff2-df3b23dd9160   1Gi        RWO            Delete           Bound    test/my-lv-pvc   topolvm-provisioner            2m19s
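The manual status checks above can also be scripted. The following is a rough sketch that reads the PVC phase with a `jsonpath` query and polls until it reports `Bound`. The helper names, attempt count, and delay are assumptions made for illustration, not part of MicroShift or LVMS.

```shell
# Print the phase (for example Pending or Bound) of a PVC.
# Arguments: NAMESPACE NAME
pvc_phase() {
    oc get pvc "$2" -n "$1" -o jsonpath='{.status.phase}'
}

# Poll until the PVC reports Bound, or give up after ATTEMPTS tries.
# Arguments: NAMESPACE NAME [ATTEMPTS] [DELAY_SECONDS]
wait_for_pvc_bound() {
    ns=$1
    name=$2
    attempts=${3:-30}
    delay=${4:-2}
    i=0
    while [ "$i" -lt "$attempts" ]; do
        if [ "$(pvc_phase "$ns" "$name" 2>/dev/null)" = "Bound" ]; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Usage, with the names from test.yaml:
#   wait_for_pvc_bound test my-lv-pvc && echo "PVC is bound"
```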